Ads in ChatGPT will disguise marketing as knowledge

No matter what Sam Altman has said about ChatGPT never showing ads, ads were always inevitable. Every major media or content company follows the same playbook.

First you provide an impossible amount of free knowledge. Then you get as many people as possible to build a habit of using your service. Then you split the users into free and paid tiers: the free users get ads, the paid users do not.

Most people don't mind the ads, some even enjoy them. As consumers, we like to buy stuff, so why wouldn't we want recommended products based on our unique persona?

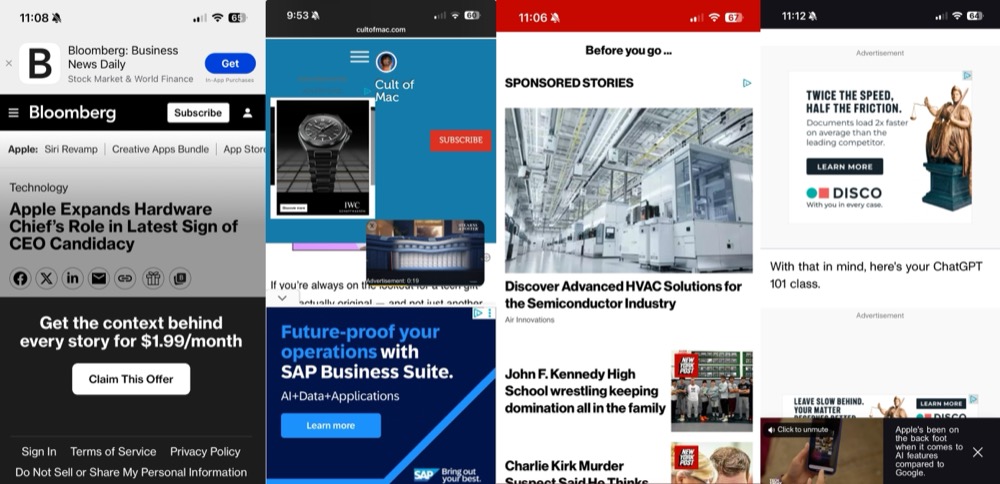

The problem isn't the ads. We can live with ad elements floating around the content, no matter how annoying they are. And most are insanely annoying, at least on a phone-sized screen.

No, the problem isn't the ads. The problem is when the ads get folded into the content, into the knowledge itself. When paid advertisements are disguised as objective truth.

So no matter what Sam Altman says today about how ads will look, where they'll be placed, when they'll show up, and what content they'll carry, there will come a time when the ads blend into the content.

And that is going to be both a boon for businesses and a huge threat to knowledge itself.

Sponsored content was always a game of disguise

In the days of print media, sponsored content would try to look as close to the real thing as possible. The fonts, colors, or layout would differ slightly, but the goal was always to blend in.

Magazines still have entire sponsored sections, like that issue of your state's monthly magazine featuring 8 pages dedicated to dental health. But now the model has flipped, and every town has a monthly magazine that's built around local business features. So in a way the whole magazine itself is disguised as legitimate content.

I don't mind the ad supplements or even the whole business-promotion model, because it's still pretty clear when you're reading news, editorials, and features versus marketing copy.

But when YouTube became the dominant medium, the line between sharing knowledge and promoting products turned into a gray area, and that opened the floodgates.

YouTube is a free-for-all for hidden agendas

Outside of print, ads have always been distinctly separate from the radio program, TV show, movie, or streaming series. Of course there have been ways to appease sponsors in any medium, including TV news, but in general you're not getting news that's been written by ad clients.

There are exceptions, of course, like commercial owners who have a say in the editorial content of broadcast news. Corporate interests will always have leverage.

With YouTube, however, anything goes. One channel might disclose its sponsored content, or the fact that it partnered with a company to produce a video, while another channel has no shame in the shill. YouTube has an optional 'paid promotion' disclosure that creators can add to videos, but it's not enforced.

This is where knowledge-for-sale has really become an issue, in my opinion. YouTubers wield a ton of influence, and because most are hobbyists turned independent businesses, there's always a need to pay for the production and the creator's time.

I can get behind 'ReviewTubers' promoting products they've been asked to review. That's the game. But when a large, influential channel proclaims an opinion that is secretly paid for... well, isn't that how 'fake news' ruined the whole idea of a paper of record, or of truth itself?

Wikipedia has checks and balances, but ChatGPT ads will not

But influence isn't a new phenomenon, and even our collective encyclopedia, Wikipedia, isn't immune. There are plenty of ways to shift a perceived knowledge source toward a commercial interest.

Wikipedia famously has a legion of volunteer editors who work independently of each other, a sort of global system of checks and balances that ensures no single editor can sneak in a paid link, for example. Mentioning a specific company in a Wikipedia entry is one thing, but a backlink from a Wikipedia article is gold, as any SEO professional will tell you.

The core model of Wikipedia is to cite publications, and that is somewhat flawed because the ability to publish has always been reserved for those with power. A book published 200 years ago carries a lot of weight as a Wikipedia source, but 200 years ago only a few individuals could afford to publish a book. This article really lays out the issue with published sources.

But at least there's a system, with human judgement, reviews, and lots of eyeballs. When you can sneak a link into a ChatGPT response by sending OpenAI some money, you get to bend our new encyclopedia to your will. And no one will be able to say, "Wait a minute, is that even correct?" Because hey, AI hallucinates, so no one can be held responsible.

So what will ads in ChatGPT look like, short and long term?

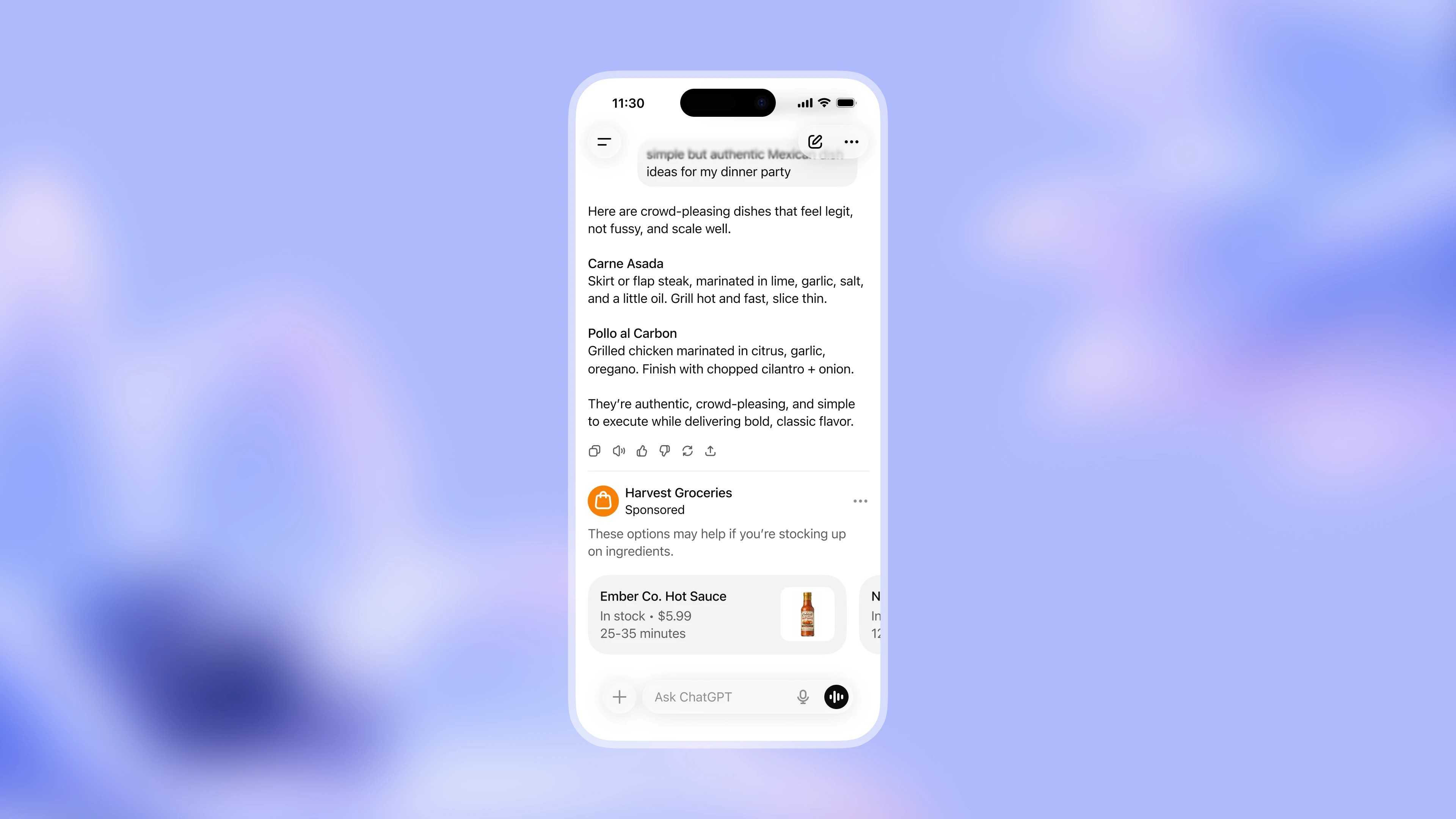

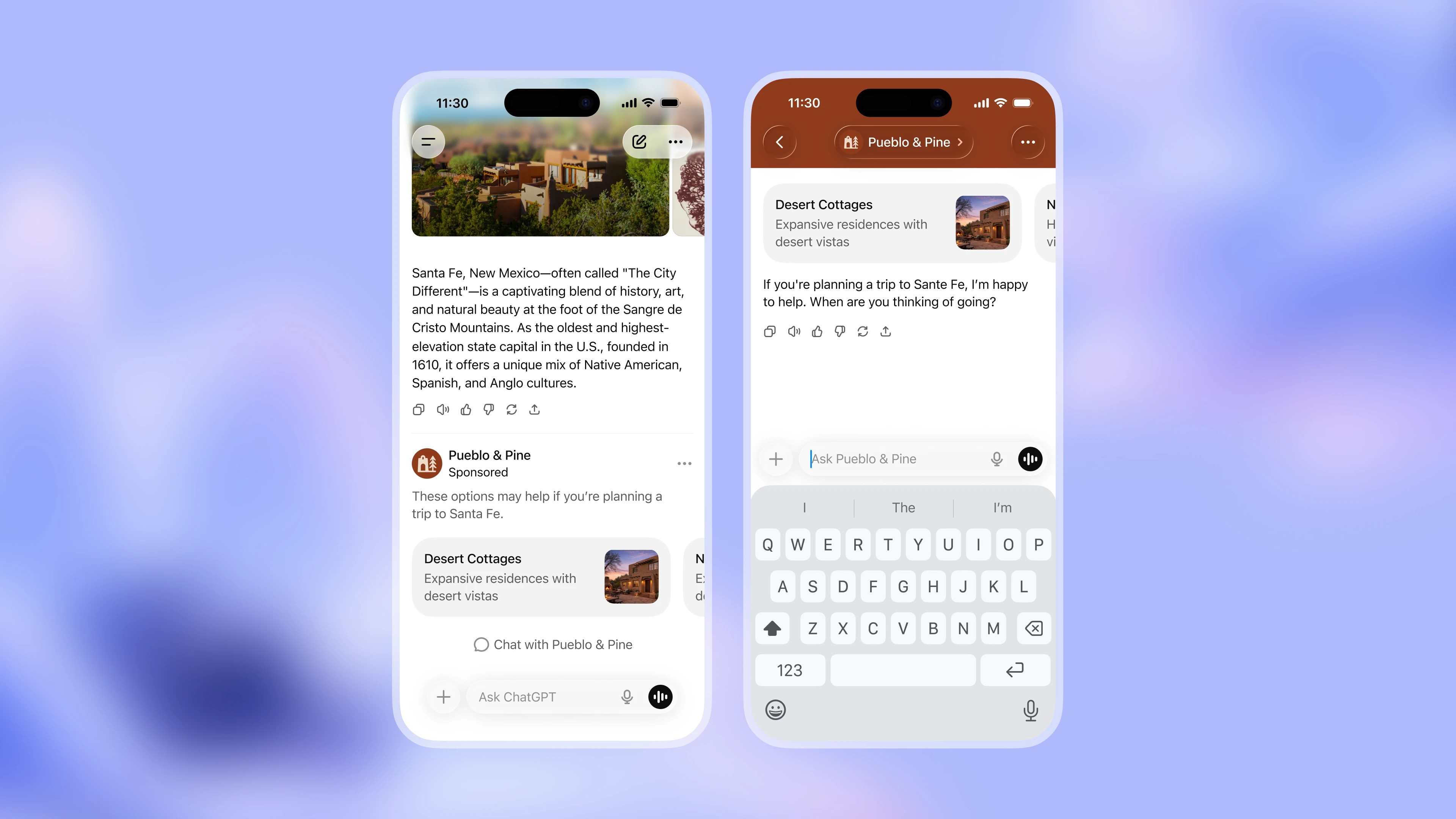

At the moment, OpenAI promises its ads will be limited to free and bottom-tier users, that they will be positioned separately from the main chat, and that there will be an option to close them.

But I don't think it will take long before the ads are rolled into the chat content. Perplexity has already experimented with a variety of formats, like sponsored follow-up questions (see "Why we're experimenting with advertising").

With Google ads and SEO, the algorithm could favor any paying advertiser in SERP rankings, but as a user you would still end up visiting a website where you could make your own informed decision about whether it was trustworthy.

But when we're dealing with a knowledge engine like ChatGPT, even with hallucinations, the expectation is that you're receiving information from a state-of-the-art computer trained on billions of pieces of data, rather than from a paying advertiser who wants to promote a blender.

So when a business can skew the chat result to favor its own interests, it starts to erode any faith in the knowledge engine. Well, ideally it would. What will likely happen is that people will keep trusting the engine for all of their information, while in the background the promotional machine twists that information into whatever its sponsors desire.

Maybe I'm too pessimistic. Maybe OpenAI will really come through with an elegant ad solution that meets its financial needs while continuing to provide a pure ChatGPT experience to everyone who has grown to rely on it.

But something tells me OpenAI wants to become a bigger ad-supported behemoth than Meta and Google combined, and the way to get there isn't through banner ads.